Tell you what: I'll just let the entire cat out of the bag, so you (and everybody else here) can play with the AI stuff and replicate my results with exactly the same software and information.

I didn't want to provide a lot of detail in public about this yet, because I'm still very ignorant of how best to prompt-engineer for perfect results, and frankly, I feel my understanding of this subject is laughably primitive. There are probably guides online by now that explain this stuff so much better than I can. But hey, this should reassure you. I love riding the bleeding edge and I'm having fun, that's all!

To use this, you'll need a CUDA-capable GPU, i.e., an NVIDIA card with enough VRAM (GeForce 10 series or newer, technically; I'm using a 3080 Ti).
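If you're not sure what card or how much VRAM you have, here's a rough Python sketch that asks `nvidia-smi` (it ships with the NVIDIA driver). The function names are just mine for illustration, and I'm deliberately not hard-coding a "minimum VRAM" number, since I don't know the real cutoff; it only reports what it finds.

```python
import shutil
import subprocess


def parse_gpu_line(line):
    """Split one 'name, memory_mib' CSV line into (name, memory_mib)."""
    name, memory = line.rsplit(",", 1)
    return name.strip(), int(memory.strip())


def list_nvidia_gpus():
    """Return [(name, vram_mib), ...], or [] if nvidia-smi isn't usable."""
    if shutil.which("nvidia-smi") is None:
        return []  # no NVIDIA driver tools on PATH
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=name,memory.total",
            "--format=csv,noheader,nounits",  # memory is reported in MiB
        ],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return [parse_gpu_line(l) for l in result.stdout.splitlines() if l.strip()]


if __name__ == "__main__":
    gpus = list_nvidia_gpus()
    if not gpus:
        print("No NVIDIA GPU found via nvidia-smi.")
    for name, vram_mib in gpus:
        print(f"{name}: {vram_mib} MiB VRAM")
```

An empty list just means `nvidia-smi` wasn't found or failed; it doesn't prove you have no usable GPU.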

First, install Python and Easy Diffusion.

Once you've installed it, run it; it'll open a web browser page. The first time, you may need to tell your OS / AV it's cool, because it does Scary Python Things and talks to the Internet and installs sub-components, etc.

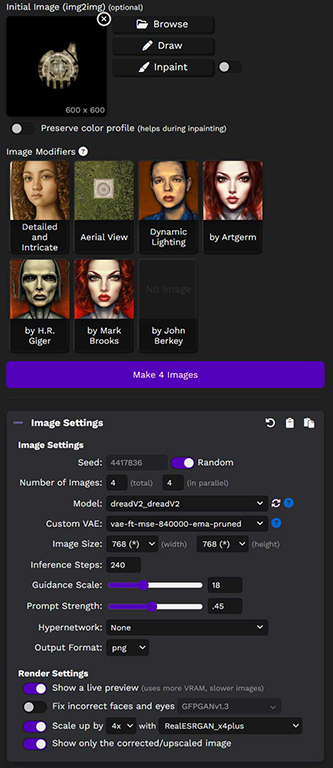

The settings I'm using, other than the text prompt part, are:

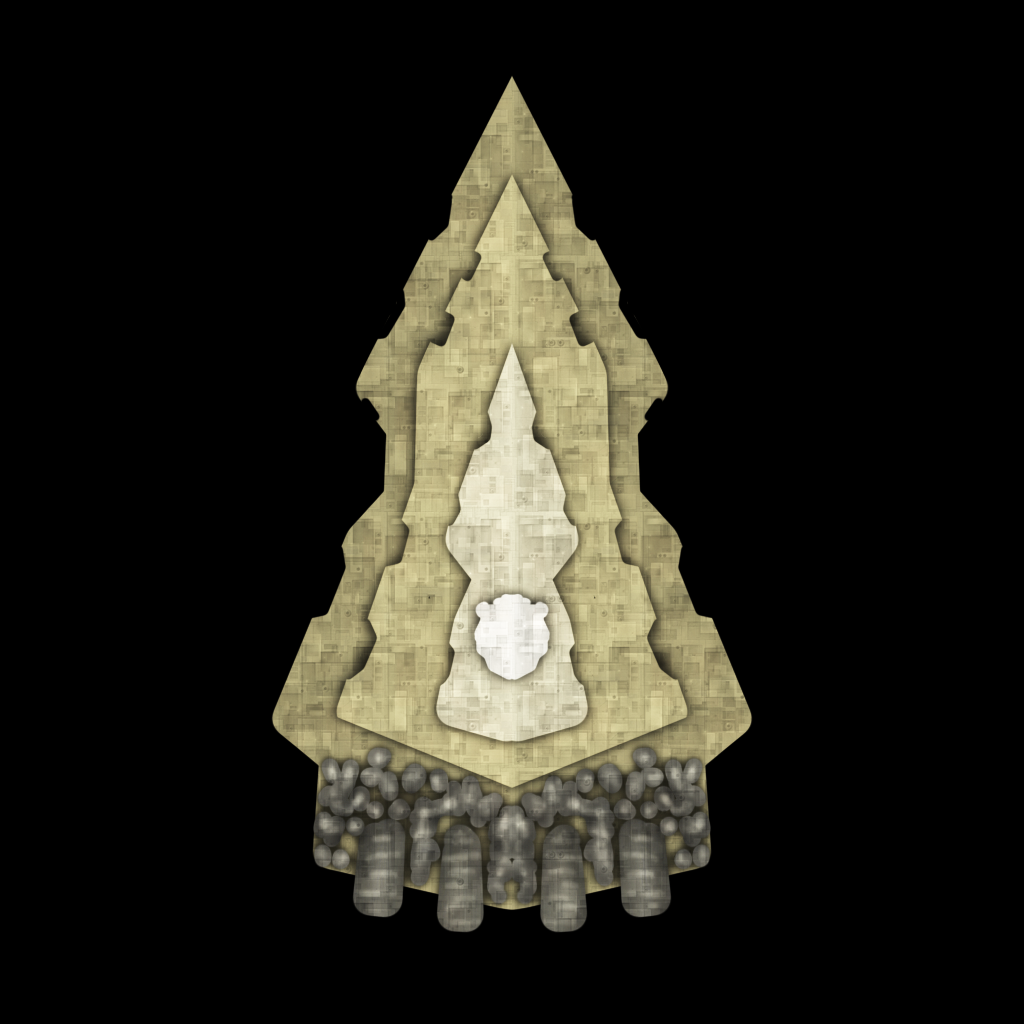

The text prompt used is: "post-apocalyptic spaceship, [aerial view:2], panel lines, greebles, hyperdetailed, small lights, small details, dirt, grunge, damage" sans quotes.

If you're trying to replicate my results exactly, you'll notice that dread_V2_dread_V2 isn't available on the Model dropdown tab of the UI. I don't think you need this model to get good results, but here's where you can find that, if you're interested. I'm using that mainly for other things, but it works fine for this purpose.

The earlier stuff I've shown was using Stability AI's default model 1.4 or NightCafe's custom model.

[EDIT]

Oh, and!

Somebody, if they're trying this out for themselves with these instructions and their own art, might notice that all my starter images have weird noisy texture overlays. I've found that that (and starting with an image that's about as large as the image you intend to make, if not larger) makes much, much better images. Why? Because of how the AI's initial pixel-sampling methods work, basically. I just use a "tech" texture I have sitting around, but I'm pretty sure most forms of noisy grayscales used w/ Multiply (and some Curves to pull up white points afterwards) will work.

[/EDIT]
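For anybody who'd rather see the noise-overlay trick as math than as Photoshop layers, here's a minimal pure-Python sketch of the two operations: a Multiply blend with a noisy grayscale, then a Curves-style stretch that pulls the white point back up. It works on flat lists of 0-255 grayscale values, and all the function names and default numbers (noise range, white point) are my own placeholders, not anything the tools require. In practice you'd do this in your image editor.

```python
import random


def make_noise(width, height, low=96, high=255, seed=0):
    """Return width*height grayscale noise values in [low, high]."""
    rng = random.Random(seed)
    return [rng.randint(low, high) for _ in range(width * height)]


def multiply_blend(base, overlay):
    """Multiply blend per pixel: result = base * overlay / 255."""
    if len(base) != len(overlay):
        raise ValueError("base and overlay must have the same pixel count")
    return [(b * o) // 255 for b, o in zip(base, overlay)]


def pull_up_white_point(pixels, white_point):
    """Stretch so white_point maps to 255; brighter values clip at 255."""
    if not 0 < white_point <= 255:
        raise ValueError("white_point must be in 1..255")
    return [min(255, (p * 255) // white_point) for p in pixels]


if __name__ == "__main__":
    width, height = 4, 4
    starter = [200] * (width * height)      # stand-in for a flat gray sprite
    noise = make_noise(width, height)       # stand-in for the "tech" texture
    darkened = multiply_blend(starter, noise)
    restored = pull_up_white_point(darkened, white_point=max(darkened))
    print(restored)
```

Multiply can only darken, which is why the white-point stretch afterwards matters: it brings the brightest pixels back to full white while keeping the noisy variation.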

So there you go. No super-secret AI model stuff is required. People have been doing this kind of stuff for quite a while now; I'm just providing some basics, and have probably even given some bad advice out of sheer ignorance; but these settings have worked for me, for the kinds of things I'm doing.

Another example. Let's try and make something vaguely Midline-ish. Is that "Fadur" for 'fake Kadur' or "Midfake"? IDK, lol.

Starter image:

Some results:

Author

Topic: Spiral Arms II - Free Community Sprite Resources (Read 159775 times)
